Neural 3D Mesh Renderer

CVPR 2018

3D Mesh Reconstruction

2D-to-3D Style Transfer

3D DeepDream

Highlights

- The first work on differentiable polygon mesh rendering for neural networks.

- The first work on deep image style transfer to 3D models.

- Received the NVIDIA Pioneering Research Award and has been cited over 1,000 times.

Abstract

For modeling the 3D world behind 2D images, which 3D representation is most appropriate? A polygon mesh is a promising candidate for its compactness and geometric properties. However, it is not straightforward to model a polygon mesh from 2D images using neural networks because the conversion from a mesh to an image, or rendering, involves a discrete operation called rasterization, which prevents back-propagation. Therefore, in this work, we propose an approximate gradient for rasterization that enables the integration of rendering into neural networks. Using this renderer, we perform single-image 3D mesh reconstruction with silhouette image supervision and our system outperforms the existing voxel-based approach. Additionally, we perform gradient-based 3D mesh editing operations, such as 2D-to-3D style transfer and 3D DeepDream, with 2D supervision for the first time. These applications demonstrate the potential of the integration of a mesh renderer into neural networks and the effectiveness of our proposed renderer.

Results

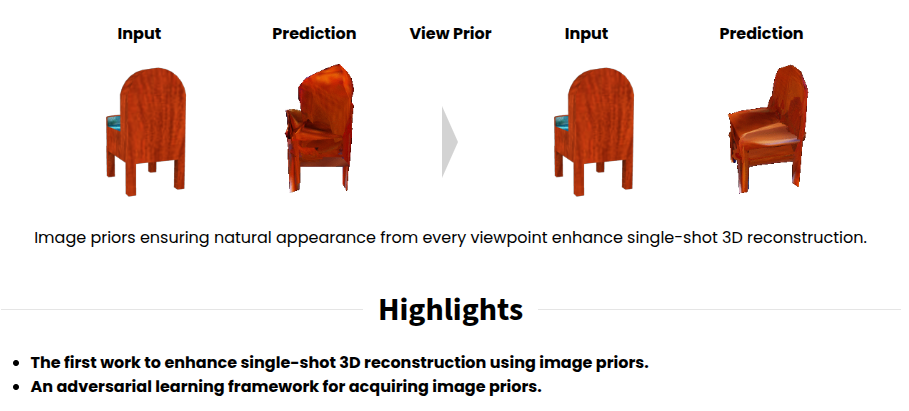

Single-image 3D reconstruction

Our rendering layer facilitates learning to generate a 3D mesh from a single image.

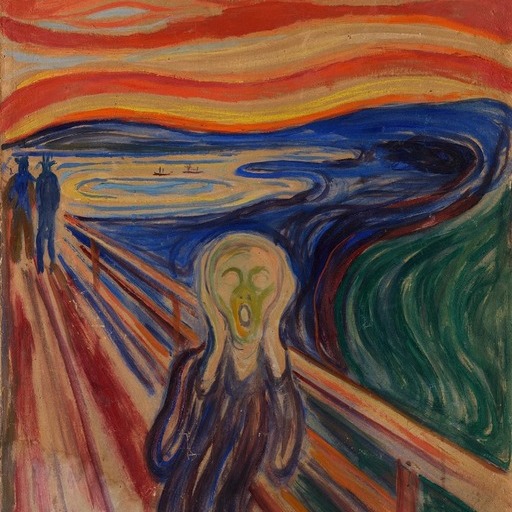

2D-to-3D style transfer

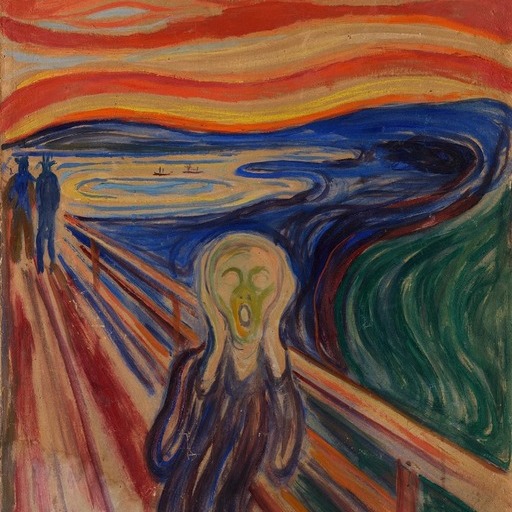

Our method transfers painting styles onto both the textures and the 3D shapes. Note how the outlines of the 3D models change to match the specified style.

The style images used are Thomson No. 5 (Yellow Sunset) (D. Coupland, 2011), The Tower of Babel (P. Bruegel the Elder, 1563), The Scream (E. Munch, 1910), and Portrait of Pablo Picasso (J. Gris, 1912).

3D DeepDream

We realize a 3D version of DeepDream.

Technical overview

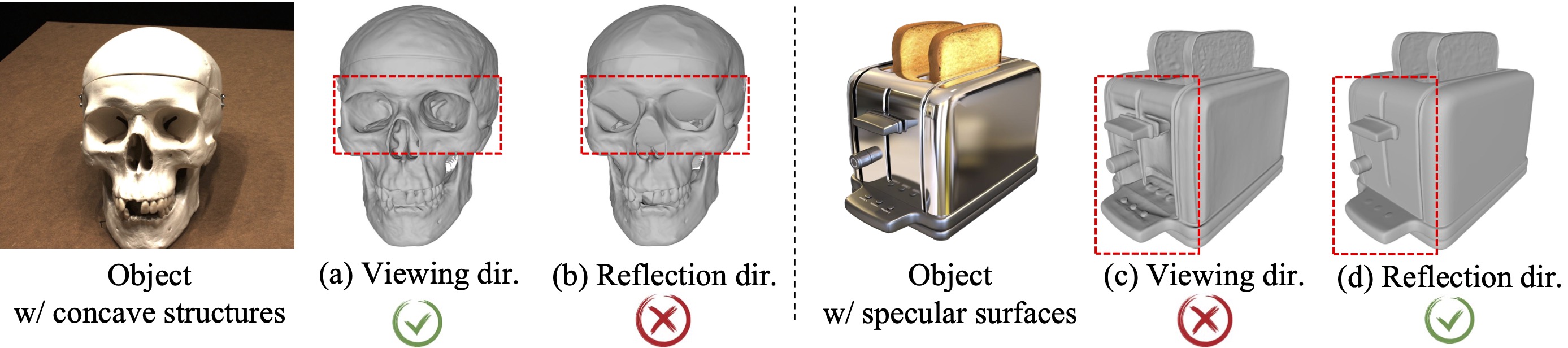

Understanding the 3D world from 2D images is one of the fundamental challenges in computer vision. Rendering (i.e., 3D-to-2D conversion) lies at the interface between the 3D world and 2D images. Because a polygon mesh is an efficient, rich, and intuitive 3D representation, it is worth pursuing a "backward pass" for a 3D mesh renderer.

Rendering cannot be directly integrated into neural networks because it involves rasterization, a discrete operation whose gradient is zero almost everywhere, so standard backpropagation cannot flow through the renderer. In this work, we propose an approximate gradient for rasterization that enables end-to-end training of neural networks that incorporate rendering.
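To see why a surrogate gradient is needed, consider a toy 1D case (our own simplification, not the paper's exact formulation): a "face" covers the interval [0, x], and each pixel is 1 if its center is covered and 0 otherwise. Pixel values are then a step function of x, so the exact derivative is zero wherever it exists. The sketch below, whose function names are all hypothetical, replaces that derivative with the finite slope from the current x to the position where a pixel would flip, and keeps it only when the flip would lower the loss.

```python
def rasterize_1d(x_vertex, centers):
    """Toy 1D 'face' covering [0, x_vertex]: a pixel is 1.0 if its center is covered."""
    return [1.0 if 0.0 <= c <= x_vertex else 0.0 for c in centers]


def approx_loss_grad(x0, center, grad_pixel):
    """Surrogate dL/dx for one pixel, given grad_pixel = dL/dI at that pixel.

    The exact dI/dx is zero almost everywhere (step function), so we use the
    finite slope from x0 to the vertex position where this pixel would flip,
    and keep it only if flipping the pixel would reduce the loss.
    """
    if center < 0.0:
        return 0.0  # moving the right end never covers or uncovers this pixel
    delta_i = -1.0 if center <= x0 else 1.0  # change in pixel value at the flip
    delta_x = center - x0                    # vertex displacement reaching the flip
    if delta_x == 0.0 or grad_pixel * delta_i >= 0.0:
        return 0.0  # degenerate, or flipping would not lower the loss
    return grad_pixel * delta_i / delta_x


def toy_loss_and_grad(x0, centers, target):
    """Squared-error loss of the rendered row and its surrogate gradient w.r.t. x0."""
    image = rasterize_1d(x0, centers)
    loss = sum((i - t) ** 2 for i, t in zip(image, target))
    grad = sum(approx_loss_grad(x0, c, 2.0 * (i - t))
               for c, i, t in zip(centers, image, target))
    return loss, grad
```

With pixel centers `[0.5, 1.5, 2.5, 3.5, 4.5]`, target `[1, 1, 1, 1, 0]`, and `x0 = 2.2`, `toy_loss_and_grad` returns a loss of 2.0 and a negative gradient, so a gradient-descent step grows the face toward the two uncovered target pixels, even though nudging x by a tiny amount leaves the rendered row unchanged.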

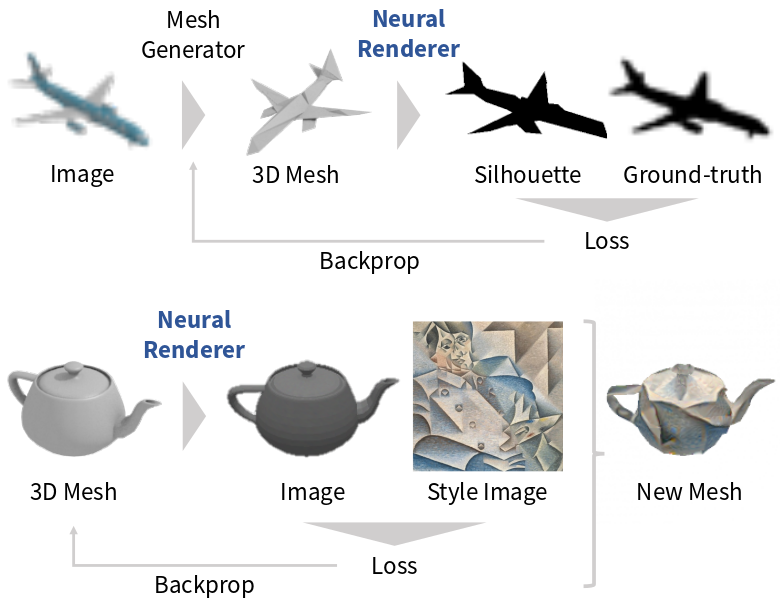

All of the applications shown above were built on this renderer. The figure illustrates the pipeline for each of them.

The 3D mesh generator was trained using silhouette images. During training, the generator minimized the difference between the silhouettes rendered from the reconstructed 3D shape and the ground-truth silhouettes.
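This page does not say which silhouette difference is minimized. A minimal sketch, assuming a soft intersection-over-union (IoU) loss, a common choice for silhouette supervision, between a rendered silhouette with values in [0, 1] and a binary ground-truth mask; the function name `silhouette_iou_loss` is hypothetical.

```python
import numpy as np


def silhouette_iou_loss(pred: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """1 - soft IoU between a rendered silhouette and a ground-truth mask.

    pred:   rendered silhouette, values in [0, 1], shape (H, W)
    target: ground-truth silhouette, values in {0, 1}, shape (H, W)
    """
    pred = pred.astype(np.float64)
    target = target.astype(np.float64)
    intersection = np.sum(pred * target)
    union = np.sum(pred + target - pred * target)
    return float(1.0 - intersection / (union + eps))
```

The loss is 0 when the silhouettes match and 1 when they do not overlap. With several viewpoints per object, one would typically average it over views.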

2D-to-3D style transfer was achieved by optimizing the mesh’s shape and texture to minimize a style loss computed on the rendered images. 3D DeepDream used a similar approach.
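This page does not define the style loss. A minimal sketch, assuming the Gram-matrix style loss of Gatys et al. and CNN feature maps (for example, from a pretrained VGG network) that have already been extracted from the rendered image and the style image. For DeepDream, one common objective is to amplify feature activations, written here as a negative squared norm so that it can be minimized. All function names are hypothetical.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """(C, H, W) feature map -> (C, C) channel correlations, normalized by H * W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def style_loss(rendered_feats, style_feats) -> float:
    """Sum over layers of squared Frobenius distances between Gram matrices."""
    return float(sum(np.sum((gram_matrix(r) - gram_matrix(s)) ** 2)
                     for r, s in zip(rendered_feats, style_feats)))


def deepdream_loss(rendered_feats) -> float:
    """Negative squared activation norm: minimizing it amplifies the features."""
    return float(-sum(np.sum(f ** 2) for f in rendered_feats))
```

In the mesh setting, the gradient of either loss with respect to the rendered image is what the renderer would carry back to the vertices and texture.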

Both applications work by propagating gradients from the 2D image space back into 3D space through our renderer.
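One ingredient of carrying 2D gradients into 3D is projecting mesh vertices onto the image plane. The sketch below assumes a simple pinhole camera and uses hypothetical function names; it shows how a gradient on a projected vertex flows back to the vertex's 3D coordinates by the chain rule. It does not model the rasterization step, which is where an approximate gradient is needed.

```python
def project(vertex, focal=1.0):
    """Pinhole projection of a camera-space vertex (x, y, z), z > 0, to image coords (u, v)."""
    x, y, z = vertex
    return (focal * x / z, focal * y / z)


def backprop_to_3d(vertex, grad_uv, focal=1.0):
    """Map dL/d(u, v) on the image plane to dL/d(x, y, z) via the chain rule.

    The Jacobian of the projection is
        du/dx = f/z, du/dy = 0,   du/dz = -f*x/z**2
        dv/dx = 0,   dv/dy = f/z, dv/dz = -f*y/z**2
    and the 3D gradient is J^T @ grad_uv.
    """
    x, y, z = vertex
    gu, gv = grad_uv
    return (
        gu * focal / z,
        gv * focal / z,
        -(gu * focal * x + gv * focal * y) / z ** 2,
    )


print(backprop_to_3d((1.0, 2.0, 4.0), (0.5, -1.0)))  # (0.125, -0.25, 0.09375)
```

A gradient on z appears even though the loss is defined only on image coordinates: moving a vertex in depth changes where it projects.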

More details can be found in the paper.

Citation

@InProceedings{kato2018renderer,
  title = {Neural 3D Mesh Renderer},
  author = {Kato, Hiroharu and Ushiku, Yoshitaka and Harada, Tatsuya},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2018}
}
